Correctly Dealing with Outliers (Part One)

In a recent post, I described a psychology paper that featured inflated false-positive rates for two reasons:

- Some outliers which should not have been excluded (judging from the pre-registration) were excluded.

- The outliers were excluded within conditions, rather than across the data.

This discovery encouraged me to do more research on the topic, and to write a paper on outlier exclusions, which was recently accepted for publication.

You can read the paper in full here if you are curious. Otherwise, here are five highlights from the paper:

1. By-condition exclusions are relatively common, and the literature has insufficiently discouraged this practice

To assess the prevalence of this practice, I searched the Journal of Experimental Psychology: General for all papers published since 2019 including the keyword “outlier”. This search returned 43 papers, 31 of which included a univariate exclusion procedure. Of those 31 papers, 9 incorrectly excluded outliers by condition.

I also discovered that the most cited papers on the topic of outlier exclusions typically do not discuss the level at which outliers should be excluded (i.e., within vs. across conditions). In fact, the only two papers I found that do discuss this issue make the incorrect recommendation that outliers should be excluded within conditions. The details of these literature surveys are available in the paper, and on the OSF repository of the project.

2. Excluding outliers by condition is a paradox, and violates the logic of null-hypothesis testing

To determine if a treatment has an effect, researchers commonly engage in null-hypothesis significance testing (NHST): They compare the observed impact of their treatment to what would be expected if the treatment did not have any effect (the null hypothesis).

Take the t-test for instance: It compares the observed difference in means between two groups to the differences that would be expected when two groups are independently sampled at random from a common normal distribution. If the observed difference is much more extreme than what this null distribution would predict, researchers typically “reject the null,” and conclude that the treatment had an effect.

How is this connected to outlier exclusions? Well, when researchers choose to exclude outliers within conditions (rather than across the data), they operate under the assumption that the two conditions are different from each other… and have therefore implicitly rejected the null hypothesis. But if they have already accepted that the null hypothesis is not true, how can they then interpret a statistical test that assumes that the null is true?

3. Excluding outliers by condition will amplify any random difference originally present between the conditions

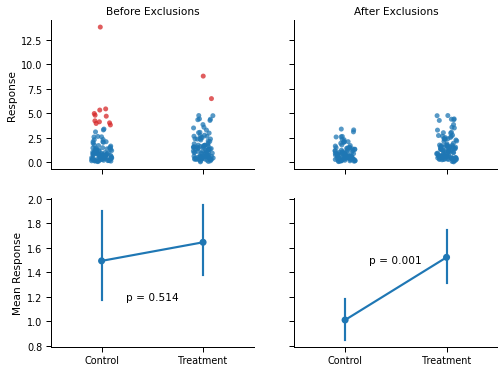

This paradox is not without consequences. Consider the figure below: The two groups were drawn from the same distribution but, by chance, the values in the “Control” condition are slightly lower than the values in the “Treatment” condition. The panel on the left shows the conclusion that a researcher will reach when comparing the two groups with a t-test.

Now, the right panel shows what would happen if the researcher decided to exclude outliers within conditions before running the statistical test. Because of the minute difference between conditions, the same high values are flagged as outliers (red dots) by the boxplot in the “Control” condition, but not in the “Treatment” condition. As a consequence, the difference between the two conditions becomes much larger, and statistically significant (p < .001).
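To make the mechanism concrete, here is a minimal sketch of the two exclusion strategies using the boxplot rule. It is an illustration only, not the code used in the paper: the function names (`iqr_fences`, `exclude_across`, `exclude_within`) and the toy data are my own assumptions, and the quartiles come from Python's `statistics.quantiles` with its default method, which may differ slightly from the quartile definition used by a given boxplot implementation.

```python
import statistics


def iqr_fences(values, k=1.5):
    """Return the (low, high) fences located k IQRs beyond the quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def exclude_across(control, treatment, k=1.5):
    """Compute one set of fences on the pooled data, blind to condition."""
    low, high = iqr_fences(control + treatment, k)
    return (
        [x for x in control if low <= x <= high],
        [x for x in treatment if low <= x <= high],
    )


def exclude_within(control, treatment, k=1.5):
    """Compute separate fences for each condition (the problematic practice)."""
    kept = []
    for values in (control, treatment):
        low, high = iqr_fences(values, k)
        kept.append([x for x in values if low <= x <= high])
    return tuple(kept)


# Hypothetical toy data: both conditions contain the same high value (16),
# but the control values happen to sit slightly lower overall.
control = [1, 2, 3, 4, 5, 6, 7, 8, 16]
treatment = [3, 4, 5, 6, 7, 8, 9, 10, 16]

print(exclude_within(control, treatment))  # 16 is dropped from control only
print(exclude_across(control, treatment))  # 16 is dropped from both conditions
```

In this toy example, the within-condition rule removes the value 16 from the control group but keeps it in the treatment group, which widens the gap between the two means. The across-data rule treats the same value identically in both groups.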

4. Excluding outliers by condition will routinely lead to unacceptable increases in Type I errors

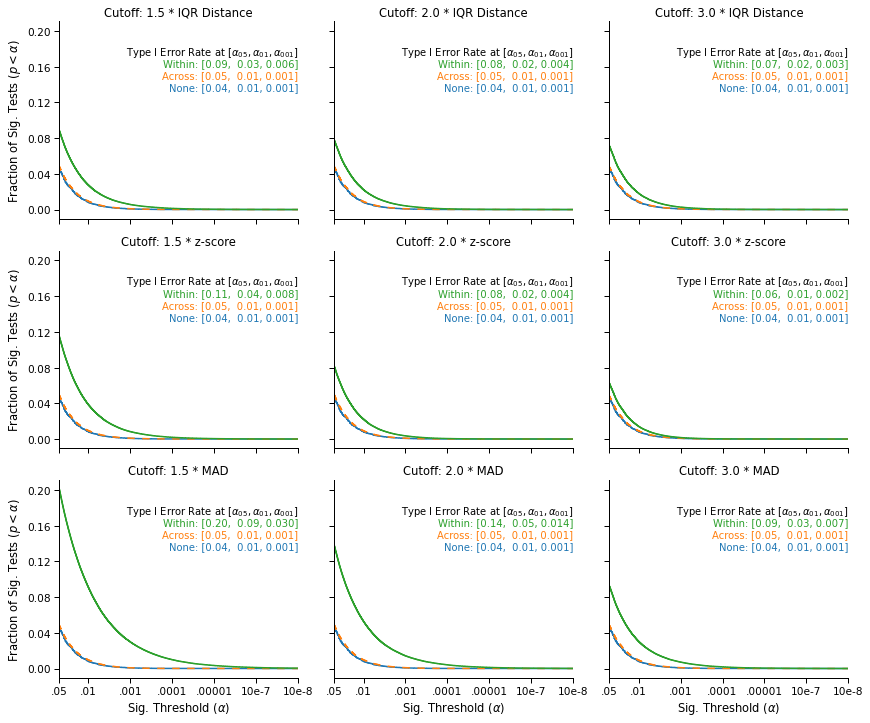

This example is not cherry-picked. To show the general impact of by-condition exclusions, I simulated a large number of “experiments” conducted over different types of data, using different statistical tests, using different outlier exclusion cutoffs (IQR distance, z-score, and Median Absolute Deviation) and thresholds (1.5, 2 or 3 times the IQR distance/z-score/Median Absolute Deviation).

The full details are in the paper, but here is an overview of the results:

This figure displays the survival curves of the tests: The fraction of tests that were significant (on the y-axis) at a given significance threshold (on the x-axis), under different outlier exclusion cutoffs (panels) and different outlier exclusion strategies (lines).
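For readers who want to build this kind of curve from their own simulations, the quantity on the y-axis can be computed as sketched below. The function name `significance_curve` and the example p-values are hypothetical; the paper's actual plotting code may differ.

```python
def significance_curve(p_values, thresholds):
    """Map each significance threshold to the fraction of tests with p below it."""
    n = len(p_values)
    return {alpha: sum(p < alpha for p in p_values) / n for alpha in thresholds}


# Hypothetical p-values from ten simulated "experiments".
p_values = [0.001, 0.02, 0.04, 0.2, 0.5, 0.9, 0.03, 0.6, 0.7, 0.8]
curve = significance_curve(p_values, thresholds=[0.05, 0.01])
print(curve)
```

Under a true null and a well-behaved test, the fraction at each threshold should be close to the threshold itself.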

We see that when no outliers are excluded (blue), or when the outliers are excluded across the data (orange, on top of the blue line), the false-positive rates that we observe are nominal: 5% of tests are significant at α = .05, 1% are significant at α = .01, and 0.1% are significant at α = .001.

In contrast, we observe an increase in false-positive rates when applying the exclusion cutoff within conditions (green line). The simulations show that the increase is systematic and serious, and that it varies substantially across exclusion cutoffs: The most favorable case shows a 20% increase in the false-positive rate (from 5% to 6%), and the least favorable case shows a 300% increase (from 5% to 20%, a fourfold rate). In general, we see that the less stringent the cutoff, the more serious the inflation in false-positive rates: Lower cutoffs increase the number of values excluded within each condition, which further amplifies the original differences between the two samples.
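The following is a simplified sketch of such a simulation, not a reproduction of the one in the paper. Several choices are assumptions of mine: the data are log-normal, each group has 100 observations, the exclusion rule is the 1.5 IQR boxplot rule, and the test is a two-sided Welch test whose p-value uses a normal approximation (so that the example only needs the standard library). All function names are hypothetical.

```python
import random
import statistics
from statistics import NormalDist


def fences(values, k):
    """Boxplot fences located k IQRs beyond the quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    return q1 - k * (q3 - q1), q3 + k * (q3 - q1)


def trim(values, bounds):
    """Keep only the values inside the (low, high) bounds."""
    low, high = bounds
    return [x for x in values if low <= x <= high]


def p_value(a, b):
    """Two-sided Welch test; normal approximation, assumed adequate for n near 100."""
    se = (statistics.variance(a) / len(a) + statistics.variance(b) / len(b)) ** 0.5
    z = (statistics.fmean(a) - statistics.fmean(b)) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate(n_sims=2000, n=100, k=1.5, alpha=0.05, seed=1):
    """Estimate false-positive rates when both groups share one distribution."""
    rng = random.Random(seed)
    hits = {"none": 0, "across": 0, "within": 0}
    for _ in range(n_sims):
        # The null is true by construction: same distribution for both groups.
        a = [rng.lognormvariate(0, 1) for _ in range(n)]
        b = [rng.lognormvariate(0, 1) for _ in range(n)]
        pooled = fences(a + b, k)
        samples = {
            "none": (a, b),
            "across": (trim(a, pooled), trim(b, pooled)),
            "within": (trim(a, fences(a, k)), trim(b, fences(b, k))),
        }
        for name, (x, y) in samples.items():
            hits[name] += p_value(x, y) < alpha
    return {name: count / n_sims for name, count in hits.items()}


if __name__ == "__main__":
    print(simulate())
```

If the argument above holds, the "none" and "across" rates should stay close to the chosen alpha, while the "within" rate should be visibly higher. The exact numbers depend on the distribution, sample size, and cutoff chosen here, so they should not be read as the paper's results.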

This is bad news, but is there any chance that the simulations overstate the problem? Wouldn’t it be less severe in real data?

5. The problem is also observed in real data, and is further compounded when exclusions are performed “iteratively”

In this section, I will use the data of the paper that I previously blogged about.

First, the “anomalous exclusions” that I described in the original post turned out to have a benign, if unusual, explanation: The outliers were “iteratively” excluded within conditions. Any observation with a score lower than “three times the interquartile range below the lower quartile” of its condition was removed from the data, and this procedure was recursively applied again within each condition, until no new outliers were found. Since the pre-registration did not mention this procedure, I originally believed that some outliers should not have been removed from the data.
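The iterative procedure described above can be sketched as follows. This is my own illustrative reading of the rule, applied to one condition at a time, and not the original authors' code: the function names and the toy data are hypothetical, and the quartile method is the default of Python's `statistics.quantiles`.

```python
import statistics


def exclude_low_once(values, k=3.0):
    """Drop values lower than k IQRs below the lower quartile (single pass)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    cutoff = q1 - k * (q3 - q1)
    return [x for x in values if x >= cutoff]


def exclude_low_iteratively(values, k=3.0):
    """Re-apply the single-pass rule until a pass removes nothing."""
    current = list(values)
    while True:
        trimmed = exclude_low_once(current, k)
        if len(trimmed) == len(current):
            return current
        current = trimmed


# Hypothetical scores for one condition.
scores = [-200, -20, -15, 10, 10, 10, 10, 11, 11, 12, 12, 12]
print(exclude_low_once(scores))         # only -200 is removed
print(exclude_low_iteratively(scores))  # -20 and -15 are removed on a later pass
```

The toy data show why iteration matters: once the most extreme value is gone, the quartiles are recomputed on the remaining data, the interquartile range shrinks, and values that survived the first pass now fall below the new cutoff.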

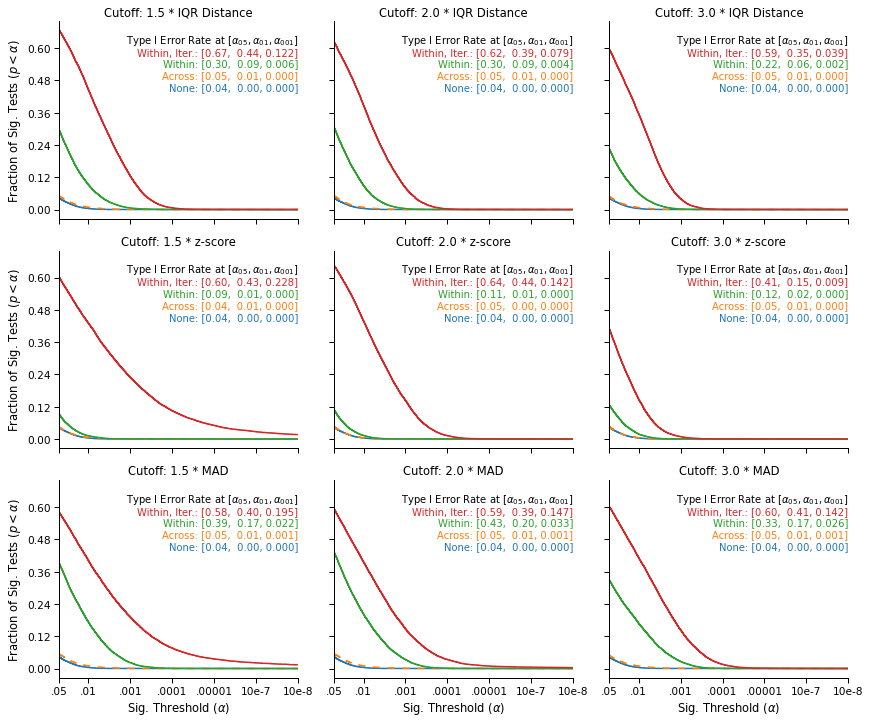

Now, what would be the impact of such “iterated exclusions”? The figure below provides an estimate of the false-positive rates that one would observe under various outlier exclusion procedures, in the context of this data. Again, the methodological details are available in the paper.

This figure replicates the Type I error inflation previously observed in simulated data. While outlier exclusions are not associated with higher false-positive rates when they are performed across the data (the orange line, on top of the blue line), the likelihood of a false-positive result increases sharply when outliers are excluded within conditions (the green line): It is always higher than 9% and can be as high as 43%.

Finally, the increase is even stronger when the outliers are iteratively excluded within conditions (the red line): Such iterated exclusions cause the two conditions to further diverge, and routinely lead to differences that the theoretical null distribution would consider extremely unlikely. In particular, the upper right panel shows that the exclusion strategy and cutoff reported in the paper are associated with a false-positive rate of 59%, which translates into a false-positive rate of 29.5% for the directional hypothesis that was pre-registered.

Note that this does not mean that the hypothesis of the paper is false, or that the paper is a false-positive. It only confirms that within-condition exclusions can lead to unacceptably high false-positive rates (even in real, non-simulated data), particularly when the exclusions are performed iteratively.

In the second part of this blog post, I will discuss a more general result of the paper: Any outlier exclusion procedure that is not blind to the hypothesis that researchers want to test may result in inflated false-positive rates.